Abstract

The double-factored decision theory for Markov decision processes with multiple scenarios of the parameters is proposed in this article. We introduce scenario belief to describe the probability distribution of scenarios in the system, and scenario expectation to formulate the expected total discounted reward of a policy. We establish a new framework named as double-factored Markov decision process (DFMDP), in which the physical state and scenario belief are shown to be the double factors serving as the sufficient statistics for the history of the decision process. Four classes of policies for the finite horizon DFMDPs are studied and it is shown that there exists a double-factored Markovian deterministic policy which is optimal among all policies. We also formulate the infinite horizon DFMDPs and present its optimality equation in this paper. An exact solution method named as double-factored backward induction for the finite horizon DFMDPs is proposed. It is utilized to find the optimal policies for the numeric examples and then compared with policies derived from other methods from the related literatures.

Similar content being viewed by others

References

Puterman, M.L.: Markov Decision Processes: Discrete Stochastic Dynamic Programming, 2nd edn. Wiley, New Jersey (2005)

Beemsterboer, B.J., Land, M.J., Teunter, R.H.: Flexible lot sizing in hybrid make-to-order/make-to-stock production planning. Eur. J. Oper. Res. 260(3), 1014–1023 (2017)

Chen, N., Teven, K., Wang, C.: A partitioning algorithm for markov decision processes with applications to market microstructure. Manag. Sci. 64(2), 784–803 (2018)

Steimle, L.N., Kaufman, D.L., Denton, B.T.: Multi-model Markov decision processes. IISE Trans. 53(10), 1124–1139 (2022)

Buchholz, P., Scheftelowitsch, D.: Computation of weighted sums of rewards for concurrent MDPs. Math. Methods Oper. Res. 89(1), 1–42 (2019)

Givan, R., Leach, S., Dean, T.: Bounded-parameter Markov decision processes. Artif. Intell. 122(1–2), 71–109 (2000)

Delgado, K.V., Sanner, S., de Barros, L.N.: Efficient solutions to factored MDPs with imprecise transition probabilities. Artif. Intell. 175(9–10), 1498–1527 (2011)

Witwicki, S.J., Melo, F.S., Capitan, J., Spaan, M.T.J.: A flexible approach to modeling unpredictable events in MDPs. In: Proceedings of Twenty-Third International Conference on Automated Planning and Scheduling ICAPS2013, pp. 260–268 (2013)

Duff, M.: Optimal learning: computational procedures for bayes-adaptive markov decision processes. Ph.D. thesis, University of Massachusetts Amherst, Amherst, MA (2002)

Castro, P. S., Precup, D.: Using linear programming for Bayesian exploration in Markov decision processes. In: International Joint Conference on Artificial Intelligence IJCAI2007, pp. 2437–2442 (2007)

Kumar, P.: Information theoretic learning methods for Markov decision processes with parametric uncertainty. Ph.D. thesis, University of Washington (2018).

Iyengar, G.: Robust dynamic programming. Math. Oper. Res. 30(2), 257–280 (2005)

Nilim, A., El Ghaoui, L.: Robust control of Markov decision processes with uncertain transition matrices. Oper. Res. 53(5), 780–798 (2005)

Delgado, K.V., de Barros, L.N., Dias, D.B., Sanner, S.: Real-time dynamic programming for Markov decision process with imprecise probabilities. Artif. Intell. 230(8), 192–223 (2016)

Moreira, D.A.M., Delgado, K.V., de Barros, L.N.: Robust probabilistic planning with ilao. Appl. Intell. 45(3), 662–672 (2016)

Delage, E., Shie, M.: Percentile optimization for markov decision processes with parameter uncertainty. Oper. Res. 58(1), 203–213 (2010)

Adulyasak, Y., Varakantham, P., Ahmed, A., Jaillet, P.: Solving uncertain MDPs with objectives that are separable over instantiations of model uncertainty. In: Proceedings of the Twenty-Ninth AAAI Conference on Artificial Intelligence, Austin, Texas, AAAI Press, pp. 3454–3460 (2015)

Ahmed, A., Varakantham, P., Lowalekar, M., Adulyasak, Y., Jaillet, P.: Sampling based approaches for minimizing regret in uncertain Markov decision processes (MDPs). J. Artif. Intell. Res. 59, 229–264 (2017)

Meraklı, M., Küçükyavuz, S.: Risk aversion to parameter uncertainty in Markov decision processes with an application to slow-onset disaster relief. IISE Trans. 52(8), 811–831 (2019)

Shani, G., Heckerman, D., Brafman, R.: An MDP-based recommender system. J. Mach. Learn. Res. 6(43), 1265–1295 (2005)

Chen, Q., Ayer, T., Chhatwal, J.: Sensitivity analysis in sequential decision models: a probabilistic approach. Med. Decis. Making 37(2), 243–252 (2017)

Bala, M.V., Mauskopf, J.A.: Optimal assignment of treatments to health states using a Markov decision model. Pharmacoeconomics 24(4), 345–354 (2006)

Acknowledgements

The author is grateful to the editors and reviews for their dedicated inputs and supports during the review process. The author would like to thank his advisor Prof. Theodore Allen from Systems Engineering of The Ohio State University, for his continuous support and guidance. The author also wants to express his sincere appreciation to Prof. Nick Hall from Fisher Business School of The Ohio State University for his valuable suggestions on this work.

Author information

Authors and Affiliations

Contributions

C.-J. Hou contributed to all the elements involved in this paper, including framework conceptualization, algorithm design, mathematical proof, and numeric validation.

Corresponding author

Ethics declarations

Competing Interests

The author declares that there are no competing interests related to the research work and the writing of this paper.

Additional information

This research was originally supported by the (United States) National Science Foundation (No. 1409214).

Appendix

Appendix

1.1 Proof of Theorem 1

For any given \({h}_{0}=\langle {s}_{0},{{\varvec{b}}}_{{s}_{0}}\rangle \in S\times {\Delta }^{C}\) and a fixed π ∊ ΠDHD, the history sets \({H}_{t}^{\pi }\) for t = 0,1, ···, Z are determined by step 1 in the policy evaluation algorithm. Equation (22) shows that when the history up to time t is \(h_{t} \in H_{t}^{\pi }\), the expected value of policy π at decision epoch t, t + 1, ···, Z is equal to the reward \({\overline{r} }_{{s}_{t}}^{{x}_{t}({h}_{t})}\) received by selecting action xt(ht) plus the expected total discounted reward over the remaining periods. The second term contains the product of \({\overline{p} }_{{s}_{t},{s}_{t+1}}^{{x}_{t}({h}_{t})}\) the probability of state transiting from st to st+1 when action xt(ht) is performed at decision epoch t, and \({U}_{\mathrm{t}+1}^{\pi }\) the expected total discounted reward obtained by applying π at decision epoch t + 1, ···, Z when the history up to time t + 1 is \({h}_{t+1}=\left({h}_{t},{x}_{t}({h}_{t}),\langle {s}_{t+1},{{\varvec{b}}}_{{s}_{t+1}}\rangle \right)\). Summing over all possible states st+1 gives the desired expectation expressed in terms of \({U}_{\mathrm{t}+1}^{\pi }\). So Eq. (22) can be written as below

The rest of the proof is by backward induction where the index of induction is t. It is obvious that (21) holds when t = Z. Suppose now that (21) holds for t + 1, ···, Z. Then by using (A1) and the induction hypothesis, we have

It is true that (21) holds for t. Therefore, \({V}^{\pi }\left({s}_{0},{{\varvec{b}}}_{{s}_{0}}\right)={U}_{0}^{\pi }\left({h}_{0}\right)\) when t = 0.

1.2 Proof of Theorem 3

The proof is in two parts. Firstly, we establish by induction that \({U}_{t}\left({h}_{t}\right)\geqslant {U}_{t}^{*}\left({h}_{t}\right)\) for all ht \(\in\) Ht and t = 0,1, ···, Z. Obviously, \({U}_{Z}\left({h}_{Z}\right)={\overline{r} }_{{s}_{Z}}^{0}={U}_{Z}^{\pi }\left({h}_{Z}\right)\) for all hZ \(\in\) HZ and π \(\in\) ΠDHR. Therefore \({U}_{Z}\left({h}_{Z}\right)={U}_{Z}^{*}\left({h}_{Z}\right)\) for all hZ \(\in\) HZ. Now assume that \({U}_{t}\left({h}_{t}\right)\geqslant {U}_{t}^{*}\left({h}_{t}\right)\) for all \(h_{t} \in H_{t}\) for t = n + 1, ···, Z. Let \(\pi^{\prime } = \left( {x_{0}^{\prime } ,x_{1}^{\prime } ,\cdots,x_{Z - 1}^{\prime } } \right)\) be an arbitrary policy in ΠDHR. So for t = n, we have

Line (A2) holds because of the induction hypothesis and non-negativity of \(\overline{{\varvec{p}} }\). Line (A3) holds because of the definition of \({U}_{n+1}^{*}\). Line (A4) follows from Lemma 1. The last equality follows from (23) and Theorem 2.

Because \({\pi}'\) is arbitrary, we have

for all π \(\in\) ΠDHR. Thus \({U}_{t}\left({h}_{t}\right)\geqslant {U}_{t}^{*}\left({h}_{t}\right)\) and the induction hypothesis holds. The first part finishes.

For the second part, we establish that for any ε > 0, there always exists a \({\pi}' {\in}\) ΠDHD so that

for all ht \(\in\) Ht and t = 0,1, ··· , Z. Since \(U_{Z}^{{\pi^{\prime } }} \left( {h_{Z} } \right) = U_{Z} \left( {h_{Z} } \right) = \overline{r}_{{s_{Z} }}^{0}\), the induction hypothesis holds for t = Z. Assuming that \(U_{t}^{{\pi^{\prime } }} \left( {h_{t} } \right) + \left( {Z - t} \right)\varepsilon \geqslant U_{t} \left( {h_{t} } \right)\) for t = n + 1, ···, Z, we have

The second line in (A6) holds because of the induction hypothesis and ε > 0. Thus the inductive hypothesis is satisfied and (A5) holds for t = 0,1, ···, Z.

Thus for any ε > 0, there exists a \({\pi}'\) \(\in\) ΠDHR for which

then part (i) in the theorem holds. Part (ii) in the theorem holds because

1.2.1 Proof of Theorem 4

For t = Z, clearly \({U}_{Z}^{{\pi }^{*}}\left({h}_{Z}\right)={U}_{Z}^{*}\left({h}_{Z}\right)\) for all hZ \(\in\) HZ. Assume argument in part (i) in the theorem is true for t = n + 1, ···, Z. Then, for t = n and \({h}_{n}=\left({h}_{n-1},{x}_{n-1}^{*}({h}_{n-1}),\langle {s}_{n},{{\varvec{b}}}_{{s}_{n}}\rangle \right)\),

Thus the induction hypothesis is true. Part (ii) in the theorem follows from Theorem 1 and Theorem 3-(ii).

1.3 Proof of Theorem 6

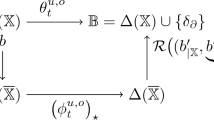

We show that (i) holds by induction. Since \({U}_{Z}^{*}\left({h}_{Z}\right)={U}_{Z}^{*}\left({h}_{Z-1},{a}_{Z-1},\langle {s}_{Z},{{\varvec{b}}}_{{s}_{Z}}\rangle \right)={\overline{r} }_{{s}_{Z}}^{0}\) for all hZ–1 \(\in\) HZ–1 and aZ–1 \(\in\) A, \({U}_{Z}^{*}\left({h}_{Z}\right)={U}_{Z}^{*}\left({s}_{Z},{{\varvec{b}}}_{{s}_{Z}}\right)\). Assume now that (i) is true for t = n + 1, ···, Z. Then let t = n. For any \({h}_{n}=\left({h}_{n-1},{a}_{n-1},\langle {s}_{n},{{\varvec{b}}}_{{s}_{n}}\rangle \right)\in {H}_{n}\), it follows from (24), the induction hypothesis and (14) that

Because the quantities within brackets in the last line of (A7) depends on hn only through \(\langle {s}_{n},{{\varvec{b}}}_{{s}_{n}}\rangle\), we have \({U}_{n}^{*}\left({h}_{n}\right)={U}_{n}^{*}\left({s}_{n},{{\varvec{b}}}_{{s}_{n}}\right)\). Thus part (i) in the theorem is true for t = 0,1, ··· , Z.

When S and A are finite, there exists a policy \(\pi^{*} = \left( {x_{0}^{*} ,x_{1}^{*},\,\dots\,,x_{Z - 1}^{*} } \right) \in {\Pi }^{{{\text{DMD}}}}\) derived from

Therefore, by part (i) in the theorem and Theorem 4-(ii), \({\pi }^{*}\) is optimal.

1.4 Proof of Theorem 7

We will show that \({W}^{\pi }\left({s}_{0},{{\varvec{b}}}_{{s}_{0}}\right)\) in (4) is equal to \({V}^{\pi }({s}_{0},{{\varvec{b}}}_{{s}_{0}})\) in (16). Firstly, we provide another calculation of scenario belief \({{\varvec{b}}}_{{s}_{t}}\) in state st at decision epoch t. For any \(h_{0} = \langle s_{0} ,{\varvec{b}}_{{s_{0} }} \rangle \in S \times {\Delta }^{C}\), using history recursion \({h}_{t}=({h}_{t-1},{a}_{t-1},\langle {s}_{t},\tau (\langle {s}_{t-1},{{\varvec{b}}}_{{s}_{t-1}}\rangle ,{a}_{t-1},{s}_{t})\rangle )\) repeatedly, the belief \({{\varvec{b}}}_{{s}_{t}}\) within \(\langle {s}_{t},{{\varvec{b}}}_{{s}_{t}}\rangle\) in ht \(\in\) Ht can be expressed by

where s0, a0, s1, ···, at−1, st are the state realizations and actions taken up to t.

For a fixed policy π \(\in\) ΠDHD, starting from the boundary condition \({U}_{Z}^{\pi }\left({h}_{Z}\right)={\overline{r} }_{{s}_{Z}}^{0}\), we evaluate recursively \({U}_{t}^{\pi }\) for \(t=Z-1,\cdots,1,0\) by (22), that is,

Submitting (14) into the last equation in (A9) and using (A8), we have

On the other hand, Steimle et al. [4] infer that there are no optimal policies that are Markovian for the problem in (5). Then, we consider the history-dependent deterministic policies of standard MDPs, \(\pi = \left\{ {x_{t} \left( {h_{t} } \right):h_{t} \in S \times A \times \cdots \times A \times S,t \in T} \right\}\). Using the finite horizon policy evaluation algorithm presented in [1] and the same logic as in (A9), we obtain \({V}_{k}^{\pi }\left({s}_{0}\right)\) in (3) as follows

It holds from (4), (A11) and (A10) that

Then it follows from (5) and (29) that the finite horizon DFMDP is equivalent to the WVP of the multi-scenario MDP.

1.5 Proof of Theorem 8

For a given \(\langle {s}_{0},{{\varvec{b}}}_{{s}_{0}}\rangle\), (10) becomes

since p1 = … = p|C|= p0. This implies that the all scenario beliefs for any state at any time are constant and equal to \({{\varvec{b}}}_{{s}_{0}}\). As a result, \(\overline{r}_{{s_{t} }}^{{a_{t} }} = \mathop \sum \nolimits_{k \in C} b_{{s_{0} ,k}} r_{{s_{t} ,k}}^{{a_{t} }} ,\forall\, s_{t} \in S,a_{t} \in A,t \in T\), i.e., \(\overline{\varvec{r}} = \mathop \sum \nolimits_{k \in C} b_{{s_{0} ,k}} {\varvec{r}}_{k}\) which means that the scenario expected reward does not change over time. Therefore, the DFMDP with the type-I of scenarios can be reduced to a standard MDP with parameter pair (\({{\varvec{p}}}_{0}\),\(\overline{{\varvec{r}} }\)).

1.6 Proof of Theorem 9

Firstly, we will prove that H is an isotone mapping. Let V, U \(\in\) \(\mathcal{V}\) and U \(\geqslant\) V. Suppose that

then for any \(\langle s,{{\varvec{b}}}_{s}\rangle \in S\times {\Delta }^{C}\),

It shows that HU \(\geqslant\) HV when U \(\geqslant\) V. So H is an isotone mapping.

Then we will prove that for all \({\text{V}}\), U \(\in\) \({\mathcal{V}}\), that \(\left \| HV-HU \right \| \leqslant \gamma \left \| V-U \right \|\) is true for any 0 < γ < 1. Let V, U \(\in\) \({\mathcal{V}}\) and HV(s, bs) \(\geqslant\) HU(s, bs) for a fixed \(\langle s,{{\varvec{b}}}_{s}\rangle \in S\times {\Delta }^{C}\). Again, suppose that

Then

If we assume HU(s, bs) \(\geqslant\) HV(s, bs), the same logic will imply that

for any \(\langle s,{{\varvec{b}}}_{s}\rangle \in S\times {\Delta }^{C}\). This results in \(\left \| \, HV-HU \right \| \leqslant \gamma \left \| V-U \right \|\) So H is a contraction mapping.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Hou, CJ. Double-Factored Decision Theory for Markov Decision Processes with Multiple Scenarios of the Parameters. J. Oper. Res. Soc. China (2023). https://doi.org/10.1007/s40305-023-00457-5

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s40305-023-00457-5

Keywords

- Dynamic programming

- Markov decision process

- Parameter uncertainty

- Multiple scenarios of the parameters

- Double-factored Markov decision process